Over the past 12 months, AI agents have become a critical area of investment for most organizations. Those organizations are now trying to balance rapid innovation at scale with security and governance.

Microsoft is no exception. As we set out to build an employee self-service agent, we learned a great deal about how to securely build and deploy an enterprise-wide agent, and how to measure its success.

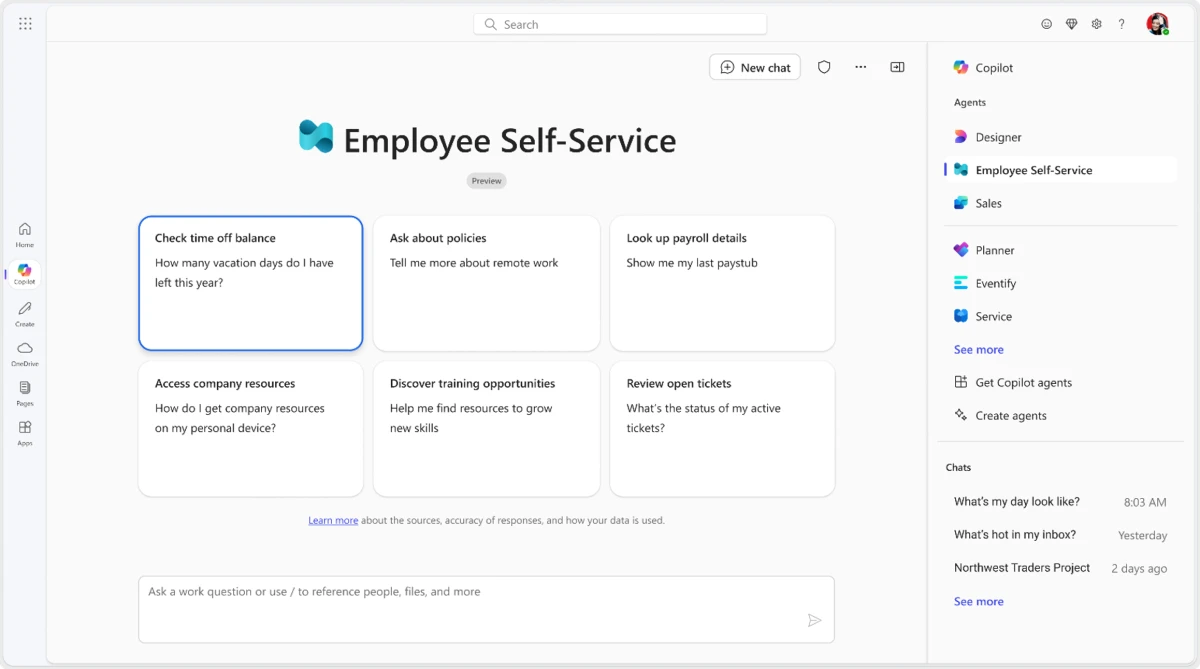

In this blog post, we share our best practices for deploying enterprise-wide agents. We focus on our own experience developing the Employee Self-Service (ESS) agent in Microsoft Copilot Studio and deploying it to Microsoft 365 Copilot.

Our journey to a simple enterprise-wide employee self-service agent began in 2013, when we launched our HRWeb SharePoint site as a centralized repository of critical HR policy and benefits information. That eventually led us to build a chatbot in 2021 to reduce the number of HR inquiries escalated to our HR policy teams.

Now, with generative AI, we’ve transformed employee support by building the ESS agent to handle HR inquiries, dramatically improving both efficiency and the employee experience.

Employees receive accurate, context-driven responses and automated actions—from policy lookups to managing time-off requests—significantly reducing HR costs and enabling greater employee productivity and satisfaction.

Following a successful pilot, Microsoft Digital expanded the ESS agent globally across key markets, including the United States, the United Kingdom, and India.

The journey of creating, securing, and rolling out the ESS agent enterprise-wide revealed five key considerations when building and deploying an enterprise-wide agent:

- Plan with purpose: Define why, success, and the challenges you’re solving

- Select and secure optimal knowledge sources for your AI agent

- Ensure security, compliance, and responsible AI in enterprise-wide agent deployments

- Build and test the pilot agent with target audiences

- Scale enterprise-wide adoption and measure impact

Plan with purpose: Define why, success, and the challenges you’re solving

Before you write a single line of code or plan the agent design, it’s critical to define your agent’s purpose. Identify the specific challenges your organization is trying to address. Ask questions such as:

- What pain points exist in the current support experience?

- Who are the end users, and what does their ideal interaction look like?

- How can generative AI improve the resolution process compared to legacy chatbots?

To learn how Microsoft built the agent prompt and responses, check out our Copilot Studio Implementation Guide blog post.

At Microsoft, we recognized that our existing chatbot solution, while effective, wasn’t optimal. Developers had to anticipate every possible user question, and employees had to use exact wording to find HR and benefits information or answers to IT-related questions.

To significantly improve this experience, we envisioned an enterprise-wide AI agent that uses generative answers, orchestration, and the ability to take actions. This would enable higher resolution rates and a more intuitive, efficient support experience for our employees.

Below is an example of goals to outline when designing your agent:

Select and secure optimal knowledge sources for your AI agent

Identify core data that optimally solves user challenges

Once you’ve determined the agent’s purpose, the next critical step is identifying the knowledge sources and data on which you’ll build your agent. For most organizations, especially during your first enterprise-wide agent development and deployment, you’ll want to limit the agent to the most essential knowledge sources. Those sources should meet a few criteria:

- These knowledge sources should be among the most secure in your organization.

- They should be protected by some form of role-based access control (RBAC) to prevent data proliferation.

- They should provide the breadth of knowledge necessary to effectively serve your initial target audience(s) and should be accurate and up to date.

At Microsoft, our journey in HR self-service began in 2013 with HRWeb, our SharePoint intranet, which provided extensive coverage and robust security through built-in role-based access controls. Using HRWeb as the foundation for our generative AI agent allowed us to efficiently deliver comprehensive, secure answers with minimal configuration.

However, recognizing that even high-quality data can become outdated, we proactively analyzed common user searches and frequent support tickets to curate a targeted set of essential questions and answers. This practice highlights the importance of regular data reviews and cleanup to continually optimize your agent’s effectiveness.

Implement data loss prevention policies for knowledge and data connectors

At Microsoft, we recommend establishing three separate environments—development, test, and production—in Copilot Studio, aligned with software development lifecycle best practices.

Using Copilot Studio alongside Microsoft Power Platform, organizations can enforce consistent routing rules and data loss prevention (DLP) policies across all environments, specifically targeting knowledge sources, connectors, and API endpoints.

This approach keeps data interactions secure and compliant. At Microsoft, it is mandatory whenever an agent performs actions beyond simple personal retrieval. Learn more about implementing DLP policies in Copilot Studio.
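To make the DLP idea concrete, here is a minimal Python sketch of a Power Platform-style DLP policy. It assumes that each connector is classified as Business, Non-Business, or Blocked, and that an agent may not use a Blocked connector or mix Business and Non-Business connectors. The connector classifications, the `check_agent` function, and the default group for unclassified connectors are illustrative assumptions. They are not the Power Platform API or Microsoft’s actual policy, which you configure in the Power Platform admin center.

```python
from enum import Enum


class Group(Enum):
    BUSINESS = "business"
    NON_BUSINESS = "non_business"
    BLOCKED = "blocked"


# Illustrative policy only: these connector classifications are assumptions.
POLICY = {
    "SharePoint": Group.BUSINESS,
    "Office 365 Users": Group.BUSINESS,
    "HTTP": Group.BLOCKED,
    "RSS": Group.NON_BUSINESS,
}


def check_agent(connectors, policy, default=Group.NON_BUSINESS):
    """Return a list of DLP violations for the connectors an agent uses."""
    violations = []
    groups = set()
    for name in connectors:
        # Unclassified connectors fall into a default group (assumed Non-Business).
        group = policy.get(name, default)
        if group is Group.BLOCKED:
            violations.append(f"{name} is blocked")
        else:
            groups.add(group)
    # Data may not flow between Business and Non-Business connectors.
    if len(groups) > 1:
        violations.append("mixes Business and Non-Business connectors")
    return violations


# The same policy is applied in development, test, and production.
for env in ("development", "test", "production"):
    print(env, check_agent(["SharePoint", "HTTP"], POLICY))
```

Because one policy object is shared across all three environments, an agent that passes in development will not unexpectedly fail the same check in production.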

Ensure security, compliance, and responsible AI in enterprise-wide agent deployments

After building your pilot agent in the development environment, it’s crucial to perform thorough security, compliance, privacy, responsible AI, and accessibility assessments before moving into testing and production environments.

At Microsoft, this begins with a comprehensive software development lifecycle (SDL) assessment. Development teams submit detailed documentation to our internal security council, which closely monitors data usage and enforces stringent security requirements. Key components of the SDL evaluation include:

- Service Tree Metadata

- Confirmation that no production data resides in dev/test environments

- Verification of data encryption at rest and in transit

- Completed threat modeling analysis

- Documentation of secure development standards

- Verified auditing and logging for agent interactions and chat transcripts

Following the SDL assessment, we perform rigorous accessibility tests aligned to Microsoft’s standards and comprehensive responsible AI evaluations by subject matter experts to ensure responses are accurate, consistent, and inclusive. Additionally, proactive “red team” security testing is recommended to identify and mitigate potential vulnerabilities.

Agents then move into testing through Microsoft Power Platform pipelines in Copilot Studio, and at this stage compliance management becomes especially critical. At Microsoft, we perform additional Tenant Trust Evaluations, including detailed documentation, comprehensive security questionnaires, and formal IT council reviews, to confirm readiness for broader deployment. For sensitive scenarios involving personal data or employee monitoring, we also conduct Works Council evaluations to maintain strict adherence to privacy standards.

We repeat these rigorous assessments whenever introducing new knowledge sources, connectors, or APIs. For example, during the integration of our HRWeb-based agent with our IT Helpdesk agent—creating the unified Employee Self-Service (ESS) agent—these evaluations helped manage data proliferation risks, enabling the ESS solution to remain secure and compliant throughout its lifecycle.
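The review sequence above can be modeled as a set of promotion gates. The sketch below is a hypothetical Python illustration, not Microsoft’s internal tooling. The gate names, the `AgentRelease` class, and the rule that any new knowledge source, connector, or API clears every earlier sign-off are assumptions chosen to mirror the process described in this section.

```python
from dataclasses import dataclass, field

# Hypothetical gate names mirroring the reviews described in this section.
DEV_TO_TEST = {"sdl", "accessibility", "responsible_ai"}
TEST_TO_PROD = {"tenant_trust"}


@dataclass
class AgentRelease:
    knowledge_sources: set
    handles_personal_data: bool = False
    passed: set = field(default_factory=set)

    def sign_off(self, gate):
        self.passed.add(gate)

    def add_source(self, source):
        # Assumption: a new knowledge source, connector, or API invalidates
        # earlier sign-offs, so every assessment must be repeated.
        self.knowledge_sources.add(source)
        self.passed.clear()

    def missing_for(self, target):
        """Return the gates still required before promoting to `target`."""
        required = set(DEV_TO_TEST)
        if target == "production":
            required |= TEST_TO_PROD
            if self.handles_personal_data:
                required.add("works_council")
        return required - self.passed
```

For example, an agent built on HRWeb that has passed the SDL, accessibility, and responsible AI reviews would still need Tenant Trust and Works Council sign-off before production. Adding an IT Helpdesk source would send it back through every gate.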

Regardless of your organization’s specific processes, documenting each step thoroughly, maintaining transparency, and actively involving your IT and Center of Excellence teams is essential for effective governance and robust security oversight.

Build and test the pilot agent with target audiences

Once the appropriate data loss prevention (DLP) measures are approved, your organization can begin building the AI agent. For scenarios that involve straightforward generative orchestration, primarily reading and writing, development is streamlined.

However, when the agent handles sensitive data that requires consistent, precise responses, we recommend using the full Copilot Studio experience instead of simpler tools such as Agent Builder in Microsoft 365 Copilot or Microsoft 365 Copilot Chat.

Copilot Studio lets you author custom response topics that are triggered by specific phrases, so the agent returns a controlled answer that supports compliance and accuracy.
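A minimal sketch of the routing idea follows. Utterances that match an authored topic’s trigger phrases receive a fixed, reviewed response, and everything else falls through to generative answers. Copilot Studio matches trigger phrases using its own language understanding. The word-overlap score, the 0.6 threshold, the `route` function, and the topic content below are hypothetical stand-ins for illustration only.

```python
import re

# Hypothetical authored topic: trigger phrases map to a fixed, reviewed response.
TOPICS = {
    "compensation_comparison": {
        "triggers": ["compare my salary", "what do my peers earn", "pay comparison"],
        "response": "Compensation questions are handled by HR. Please contact your HR representative.",
    },
}


def tokens(text):
    return set(re.findall(r"[a-z']+", text.lower()))


def route(utterance, topics=TOPICS, threshold=0.6):
    """Return (topic, response) for an authored topic, or (None, None) to use generative answers."""
    words = tokens(utterance)
    best, best_score = None, 0.0
    for name, topic in topics.items():
        for phrase in topic["triggers"]:
            phrase_words = tokens(phrase)
            # Fraction of the trigger phrase's words present in the utterance.
            score = len(words & phrase_words) / len(phrase_words)
            if score > best_score:
                best, best_score = name, score
    if best is not None and best_score >= threshold:
        return best, topics[best]["response"]
    return None, None
```

Keeping the authored response as fixed text means sensitive questions always get the same reviewed wording. General questions still benefit from generative answers.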

At Microsoft, we transitioned to Copilot Studio to precisely handle sensitive HR inquiries, avoiding non-compliant or inconsistent responses for complex questions such as those related to employee compensation comparisons.

Select pilot audience: Choose your ideal early adopters for initial feedback

Choosing the right pilot audience and timing is as critical as selecting high-quality data and securing your agent. We recommend initially deploying your AI agent to a focused group—around 100 users—and conducting A/B testing against existing solutions to measure its impact.

Gathering targeted feedback from these early adopters allows for rapid iteration, refinement, and informed expansion of your data sources and agent capabilities.
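For the A/B comparison, one common approach is a two-proportion z-test on resolution rates between the group using the agent and a control group using the existing solution. This is our assumption for illustration and not necessarily the method Microsoft used. The sketch randomly splits pilot users into two arms and tests the difference. The function names and the fixed seed are illustrative.

```python
import math
import random


def assign_arms(user_ids, seed=0):
    """Randomly split pilot users into an agent arm and a control arm."""
    rng = random.Random(seed)
    shuffled = list(user_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def two_proportion_z(resolved_a, total_a, resolved_b, total_b):
    """Two-sided z-test for a difference in resolution rates between arms A and B."""
    p_a = resolved_a / total_a
    p_b = resolved_b / total_b
    pooled = (resolved_a + resolved_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("pooled resolution rate must be strictly between 0 and 1")
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, z, p_value
```

The z-test assumes independent observations. Sessions from the same user are correlated, so counting one outcome per user is safer. With arms of about 50 users each, only fairly large differences will reach statistical significance.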

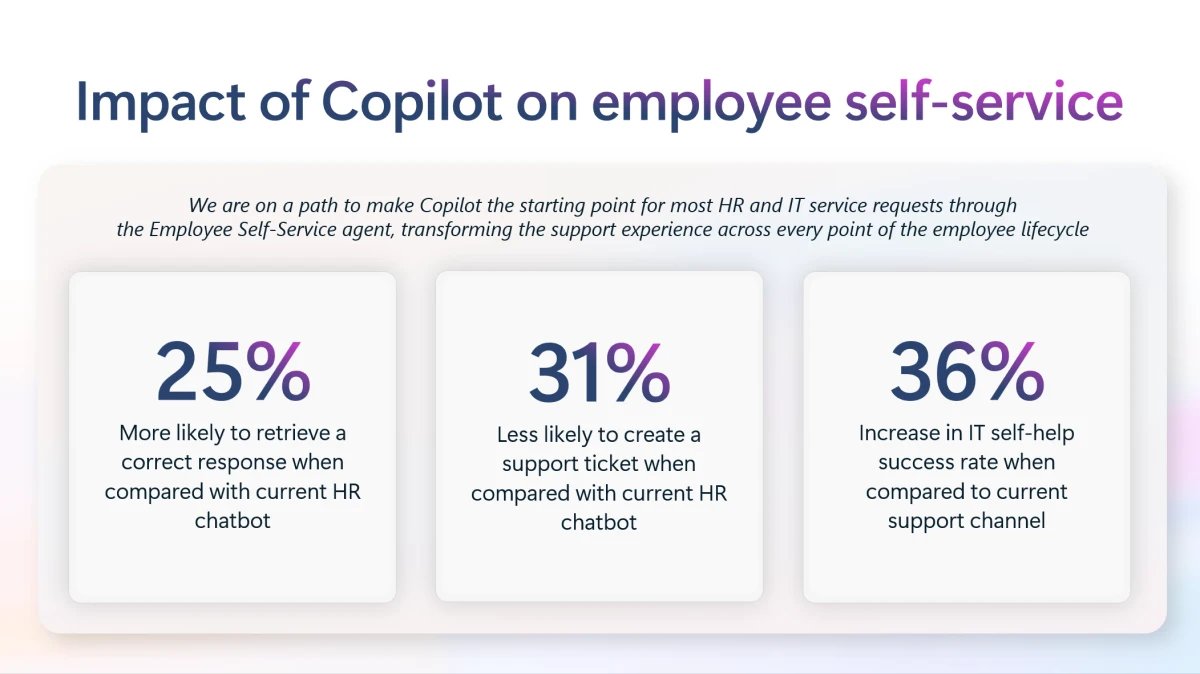

At Microsoft, we piloted our ESS agent with 100 United Kingdom employees, using the maturity of our HRWeb data in that region to maximize initial impact and insights.

Scale enterprise-wide adoption and measure impact

Rollout at scale: Leverage pilot feedback to refine agent and scale adoption

The initial ESS pilot demonstrated strong results, but scaling the agent enterprise-wide required expanding beyond our primary HRWeb SharePoint site, as critical HR data was distributed across more than 100 different line-of-business (LOB) systems.

Recognizing it wasn’t feasible to integrate all sources simultaneously, we analyzed two years of HR interactions—including tickets, searches, and chatbot conversations—to map data locations, prioritize essential sources, and determine rapid integration strategies.

We focused first on systems easily integrated through existing Microsoft Power Platform connectors and rapidly established corresponding DLP policies. Additionally, we assessed whether certain data sources could be consolidated under the primary HR SharePoint site or other high-value repositories to streamline management.
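The prioritization step can be sketched as a ranking over historical interactions. This hypothetical Python example assumes that each ticket, search, or chatbot conversation has already been tagged with the line-of-business system that holds its answer. It also assumes you know which systems already have a Power Platform connector. It ranks connector-ready systems first, then by interaction volume. The `Interaction` data model and the ranking rule are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Interaction:
    kind: str           # "ticket", "search", or "chat"
    source_system: str  # LOB system that holds the answer (assumed pre-tagged)


def prioritize(interactions, has_connector):
    """Rank LOB systems: connector-ready first, then by interaction volume, then name."""
    volume = Counter(i.source_system for i in interactions)
    return sorted(
        volume,
        # False sorts before True, so systems with a connector come first.
        key=lambda s: (not has_connector.get(s, False), -volume[s], s),
    )
```

Putting connector availability ahead of raw volume reflects the “easily integrated first” approach. Swapping the first two key elements would instead favor the highest-demand systems regardless of integration effort.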

For maximum organizational impact, Microsoft strategically prioritized deployment in key regions—initially the United States, the United Kingdom, and India—and focused on high-impact teams, such as our sales organization (MCAPS), to showcase the agent externally during customer engagements.

Large enterprises considering similar deployments should anticipate these trade-offs, such as which data sources, regions, and teams to include first. They should evaluate those trade-offs carefully so the AI agent rollout stays aligned with planned timelines and strategic goals.

Measure and report: Use insights to further refine agent and track ROI

The last stage is measuring the agent’s success and reporting it to your team and leadership. This generates the insights you need to refine the agent and demonstrates the ROI that secures continued leadership investment.

At Microsoft, we’re still refining our measurement and reporting strategy for the ESS agent, but some of the key metrics we recommend tracking for any agent are the number of sessions, engagement rates, customer satisfaction (CSAT) scores, resolution rates, abandonment rates, and knowledge source accuracy rates—all of which are available to development teams out of the box in Copilot Studio Analytics.
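Copilot Studio Analytics reports these metrics out of the box. Even so, it can help to be explicit about what each rate means when you report to leadership. The sketch below computes a few of these metrics from hypothetical session records. The outcome labels (resolved, escalated, abandoned, unengaged) approximate the session outcomes Copilot Studio reports, but its exact definitions may differ, so check the product documentation before comparing numbers. The `Session` class and `summarize` function are illustrative, and knowledge source accuracy is omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    outcome: str                # assumed: "resolved", "escalated", "abandoned", or "unengaged"
    csat: Optional[int] = None  # 1-5 survey score, if the user answered


def summarize(sessions):
    """Compute headline agent metrics from a list of sessions."""
    total = len(sessions)
    engaged = [s for s in sessions if s.outcome != "unengaged"]
    scores = [s.csat for s in sessions if s.csat is not None]

    def rate(n, d):
        # Avoid division by zero when there are no sessions of that kind.
        return n / d if d else 0.0

    return {
        "sessions": total,
        "engagement_rate": rate(len(engaged), total),
        # Resolution and abandonment are measured over engaged sessions only.
        "resolution_rate": rate(sum(s.outcome == "resolved" for s in engaged), len(engaged)),
        "abandonment_rate": rate(sum(s.outcome == "abandoned" for s in engaged), len(engaged)),
        "avg_csat": sum(scores) / len(scores) if scores else None,
    }
```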

Final takeaways about enterprise-wide agents

Enterprise-wide deployment requires a significant up-front effort, and your organization should invest most heavily in the planning, data source selection, and security phases.

These phases ensure that, from the outset, your organization has an agent design that can move your target key performance indicators (KPIs). They also ensure you select the data sources that most effectively solve end-user challenges and drive the greatest ROI. Finally, they put in place security practices that close potential gaps and prevent data proliferation.

This blog post is just step one in helping your organization roll out an enterprise-wide agent. In spring 2025, we’re making our ESS agent generally available as a product to all Microsoft customers. You’ll be able to draw not only on the lessons in this blog post but also on our development teams’ work to stand up your own ESS agent.

To learn more about the Employee Self-Service Agent, read our Inside Track blog post from Microsoft Ignite 2024.